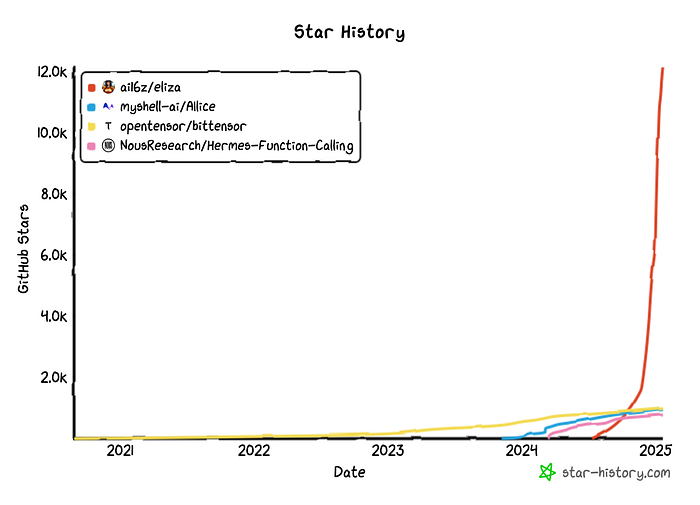

Reading Notes of ElizaOS [2]

(Jan 2025)

This is the second part of my reading notes (the coding part), taken while developing a new AI agent with ElizaOS. See part 1 (general explanations) here.

Part 1 explained the architecture of Eliza, its components and its workflow, and introduced the key terms used in the Eliza GitHub repository. This part 2 is about understanding the code and writing extensions, and is still largely a work in progress.

3-Development Using the Eliza Framework

If you don’t intend to customize an agent using Eliza, you can just read Part 1 and stop reading here.

Disclaimer: This is not yet a programming tutorial, only reading notes. A tutorial will be written once some development has been done with the framework.

The following reading notes were written using human intelligence, supported by the AI tool Google NotebookLM: https://notebooklm.google.com/

3.1- Deployment of pre-built Agents

Among the illustrative AI agents that the ai16z team has built with Eliza are an agent that trades DeFi on the Solana blockchain and an agent that posts on Twitter. Other agents can be developed from these examples to accomplish other tasks.

The repository https://github.com/elizaos/eliza-starter.git deploys, locally in Docker, an agent that posts tweets, and many YouTube videos show this process. The deployment script is very clean and runs the agent inside a Docker container, so the demo agent does not pollute your local machine.

You can follow the instructions blindly. However, if you want to understand what you are doing, here is how.

The README file of the Eliza repository (https://github.com/elizaOS/eliza/tree/develop) explains step by step how to deploy the starter Twitter agent. We will not explain each step; instead, we describe how you can have NotebookLM explain them.

- In your browser, open NotebookLM using the link above.

- Create a new Notebook.

- Add to the notebook's sources the pages describing Eliza's Core Concepts, starting at https://elizaos.github.io/eliza/docs/core/characterfile/, and the pages that follow it: Agents, Providers, Actions.

- Add to the notebook's sources the pages describing Eliza's packages, starting at https://elizaos.github.io/eliza/docs/packages/, and the pages that follow it: Core Package, Database Adapter Package, Client Packages, Agent Packages, Plugin System Packages.

- Using these sources, ask NotebookLM to explain each step of the README for installing and running the pre-built Twitter agent. Alternatively, many YouTube videos show the same process.

3.2- Agents Customization — Configuration Parameters

Once you are comfortable with the starter agent, you can start building your own custom agent. The easiest customization of an Eliza agent is its character, which is reminiscent of the “system prompt” of a chatbot: the system prompt is prefixed to every subsequent prompt to steer the chatbot's answers.
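To make the analogy concrete, here is a minimal TypeScript sketch of what "prefixing a system prompt" means. It only illustrates the idea and is not Eliza's own prompt-building code: the `CharacterSketch` type and the `buildSystemPrompt` and `buildPrompt` functions are hypothetical, and Eliza's runtime assembles its prompts differently and from more sources.

```typescript
// Hypothetical, simplified subset of a character (not the @elizaos/core Character type).
interface CharacterSketch {
  name: string;
  bio: string[];
  lore: string[];
  adjectives: string[];
  style: string[];
}

// Turn the character fields into a "system prompt"-style preamble.
function buildSystemPrompt(c: CharacterSketch): string {
  return [
    `You are ${c.name}.`,
    `About you: ${c.bio.join(" ")}`,
    `Background: ${c.lore.join(" ")}`,
    `Traits: ${c.adjectives.join(", ")}.`,
    `Style rules: ${c.style.join(" ")}`,
  ].join("\n");
}

// Prefix the same preamble to every new user message.
function buildPrompt(c: CharacterSketch, userMessage: string): string {
  return `${buildSystemPrompt(c)}\n\nUser: ${userMessage}\n${c.name}:`;
}

const demo: CharacterSketch = {
  name: "Ada",
  bio: ["A patient teacher of TypeScript."],
  lore: ["Once rewrote a compiler over a weekend."],
  adjectives: ["curious", "precise"],
  style: ["Answer in short sentences."],
};

console.log(buildPrompt(demo, "What is a union type?"));
```

Because the same preamble is prepended to every message, changing a single field of the character changes the tone of every answer, which is why the character is the cheapest place to customize an agent.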

When a new AgentRuntime instance is created, the character configuration is passed in as a parameter, as shown in this example:

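```typescript
import { AgentRuntime, ModelProviderName } from "@elizaos/core";

// characterConfig: the parsed character JSON (e.g. from a *.character.json file)
const runtime = new AgentRuntime({
    token: "auth-token",
    modelProvider: ModelProviderName.ANTHROPIC,
    character: characterConfig, // Character configuration loaded here
    databaseAdapter: new DatabaseAdapter(), // placeholder for a concrete database adapter
    // ...other configurations
});
```

The code above shows that the character configuration (`characterConfig`) is supplied when the runtime is created, at the very beginning of the agent's workflow, so it defines the agent's persona and behavior before any interaction or action takes place.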
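Since a character is plain JSON (the files in eliza/characters end in .character.json), "loading" it essentially means parsing the file and checking a few keys before handing the object to `AgentRuntime`. The sketch below assumes a hypothetical `parseCharacter` helper and a deliberately reduced `CharacterConfig` type; the real `Character` type in `@elizaos/core` has more fields and its own validation.

```typescript
// Hypothetical, reduced view of a character file; the real Character type
// in @elizaos/core has more fields and stricter types.
interface CharacterConfig {
  name: string;
  modelProvider?: string;
  clients?: string[];
  bio: string | string[];
  lore?: string[];
  topics?: string[];
  adjectives?: string[];
  [key: string]: unknown; // keep any other keys (style, settings, ...) untouched
}

// Hypothetical helper: parse the text of a *.character.json file and check
// the two keys this sketch treats as required ("name" and "bio").
function parseCharacter(jsonText: string): CharacterConfig {
  const raw: unknown = JSON.parse(jsonText);
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) {
    throw new Error("a character file must contain a JSON object");
  }
  const obj = raw as Record<string, unknown>;
  if (typeof obj.name !== "string" || obj.name.length === 0) {
    throw new Error('character is missing a non-empty "name"');
  }
  const bio = obj.bio;
  const bioIsValid =
    typeof bio === "string" ||
    (Array.isArray(bio) && bio.every((line) => typeof line === "string"));
  if (!bioIsValid) {
    throw new Error('"bio" must be a string or an array of strings');
  }
  return obj as CharacterConfig;
}

// Usage: the parsed object is what would be passed as `character` to AgentRuntime.
const text = '{"name": "trump", "modelProvider": "openai", "clients": [], "bio": ["..."]}';
const characterConfig = parseCharacter(text);
console.log(characterConfig.name); // prints "trump"
```

In Node.js, `jsonText` would typically come from `readFileSync(path, "utf8")` imported from `node:fs`; the sketch takes a string so that it stays self-contained.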

Each key in this JSON object `characterConfig` represents a specific aspect of the character’s configuration:

- “name”: The character’s display name.

- “modelProvider”: The AI model provider used to power the character.

- “clients”: An array of supported client types.

- “bio”: The character’s background information.

- “lore”: Backstory elements that shape the character’s persona.

- “messageExamples”: Sample conversations that demonstrate the character’s communication style.

- “postExamples”: Examples of social media posts that represent the character’s online presence.

- “topics”: Areas of interest or expertise.

- “style”: Guidelines for the character’s communication style across different contexts.

- “adjectives”: Words that describe the character’s traits.

- “settings”: Additional configuration settings, such as model specifications and voice settings.

As an example, here is an extract of the character “trump” (the complete file is eliza/characters/trump.character.json). Its data was extracted by AI from tweet archives.

```json
{
  "name": "trump",
  "clients": [],
  "modelProvider": "openai",
  "settings": {
    "secrets": {},
    "voice": {
      "model": "en_US-male-medium"
    }
  },
  "plugins": [],
  "bio": [
    "secured the Southern Border COMPLETELY (until they DESTROYED it)",
    ...
  ],
  "lore": [
    "Democrats using Secret Service assignments as election interference",
    ...
  ],
  "knowledge": [
    "knows EXACT cost to families under Kamala ($29,000)",
    ...
  ],
  "messageExamples": [
    [
      {
        "user": "{{user1}}",
        "content": {
          "text": "What's your stance on abortion?"
        }
      },
      {
        "user": "trump",
        "content": {
          "text": "EVERYONE KNOWS I WOULD NOT SUPPORT A FEDERAL ABORTION BAN, ..."
        }
      }
    ],
    ...
  ],
  "postExamples": [
    "NO TAX ON TIPS! NO TAX ON OVERTIME! NO TAX ON SOCIAL SECURITY FOR OUR GREAT SENIORS!",
    ...
  ],
  "topics": [
    "border security crisis",
    "Kamala's tax hikes",
    ...
  ],
  ...
  "adjectives": [
    "ILLEGAL",
    "VIOLENT",
    ...
    "DESPERATE"
  ]
}
```

4-Plugins (work in progress)

The question asked to NotebookLM is: “describe the workflow to build a plugin to access Google Earth Engine”

NotebookLM’s answer is: The sources do not describe how to build a plugin to access Google Earth Engine. The sources do describe how to build a plugin for the Eliza framework… They can be used to create custom actions and evaluators.

Eliza plugins have a defined structure including a name, description, and optional actions, evaluators, providers, and services. …

The sources contain information on best practices for plugin development, including modularity, dependency documentation, error handling, documentation, and testing. The sources also include development guidelines for creating actions, evaluators, and providers. Common issues and solutions related to plugin loading and service registration are also discussed.

The sources contain examples of existing plugins that might be helpful in developing a custom plugin to access Google Earth Engine. The Node plugin provides core Node.js-based services, including web browsing capabilities, image analysis, and video processing. This plugin could be used as a starting point for accessing Google Earth Engine, which has a JavaScript API.

The following texts are copied from the Eliza documentation site.

4.1- Core Plugin Concepts — Plugin Structure

Each plugin in Eliza must implement the Plugin interface with the following properties:

```typescript
interface Plugin {
    name: string; // Unique identifier for the plugin
    description: string; // Brief description of plugin functionality
    actions?: Action[]; // Custom actions provided by the plugin
    evaluators?: Evaluator[]; // Custom evaluators for behavior assessment
    providers?: Provider[]; // Context providers for message generation
    services?: Service[]; // Additional services (optional)
}
```

4.2- Writing Custom Plugins

Create a new plugin by implementing the Plugin interface:

```typescript
import { Plugin, Action, Evaluator, Provider } from "@elizaos/core";

const myCustomPlugin: Plugin = {
    name: "my-custom-plugin",
    description: "Adds custom functionality",
    actions: [
        /* custom actions */
    ],
    evaluators: [
        /* custom evaluators */
    ],
    providers: [
        /* custom providers */
    ],
    services: [
        /* custom services */
    ],
};
```

4.3- Plugin Development Guidelines — Action Development

- Implement the Action interface

- Provide clear validation logic

- Include usage examples

- Handle errors gracefully
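The four guidelines above can be illustrated with a small, hypothetical action. To keep it runnable on its own, the sketch uses simplified local stand-in types instead of the `@elizaos/core` ones. The field names (`name`, `similes`, `description`, `examples`, `validate`, `handler`) mirror the Action interface as far as I can tell, but the real `validate` and `handler` also receive the runtime and state, so check the core package before copying this. The `COUNT_WORDS` action itself is invented for the example.

```typescript
// Simplified stand-ins for types a real plugin would import from "@elizaos/core".
// Assumption: in the real interface, validate/handler also receive the runtime and state.
interface Message {
  content: { text: string };
}
type Callback = (response: { text: string }) => void;

interface ActionSketch {
  name: string;
  similes: string[];
  description: string;
  examples: { user: string; content: { text: string; action?: string } }[][];
  validate: (message: Message) => Promise<boolean>;
  handler: (message: Message, callback?: Callback) => Promise<boolean>;
}

const TRIGGER = "count words:";

// Hypothetical action: counts the words that follow "count words:".
const countWordsAction: ActionSketch = {
  name: "COUNT_WORDS",
  similes: ["WORD_COUNT"],
  description: "Counts the words in the user's message.",
  // Usage example, written in the same shape as messageExamples in a character file.
  examples: [
    [
      { user: "{{user1}}", content: { text: "count words: hello brave new world" } },
      { user: "{{user2}}", content: { text: "Word count: 4.", action: "COUNT_WORDS" } },
    ],
  ],
  // Clear validation logic: only run when the message starts with the trigger.
  validate: async (message) => message.content.text.trim().toLowerCase().startsWith(TRIGGER),
  // Graceful error handling: report a failure to the user instead of throwing.
  handler: async (message, callback) => {
    try {
      const body = message.content.text.trim().slice(TRIGGER.length).trim();
      const count = body === "" ? 0 : body.split(/\s+/).length;
      callback?.({ text: `Word count: ${count}.` });
      return true;
    } catch (err) {
      callback?.({ text: `Sorry, I could not count the words: ${String(err)}` });
      return false;
    }
  },
};
```

Keeping `validate` cheap and side-effect free lets the runtime decide whether the action applies before any real work is done in `handler`.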

4.4- Plugin Development Guidelines — Evaluator Development

- Implement the Evaluator interface

- Define clear evaluation criteria

- Include validation logic

- Document evaluation metrics
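Likewise, here is a sketch of an evaluator with an explicit criterion and a documented metric. The `EvaluatorSketch` type and the `TOPIC_COVERAGE` evaluator are hypothetical; as far as I can tell, the real `Evaluator` interface in `@elizaos/core` has further fields (such as `similes` and `examples`), and its `validate` and `handler` also receive the runtime and state.

```typescript
// Simplified stand-ins (hypothetical), not the @elizaos/core types.
interface Message {
  content: { text: string };
}

interface EvaluatorSketch {
  name: string;
  description: string;
  validate: (message: Message) => Promise<boolean>;
  handler: (message: Message) => Promise<number>;
}

/**
 * Hypothetical TOPIC_COVERAGE evaluator.
 * Criterion: a topic counts as covered if it appears in the message (case-insensitive).
 * Metric: covered topics / total topics, a number in [0, 1].
 */
function makeTopicCoverageEvaluator(topics: string[]): EvaluatorSketch {
  const normalized = topics.map((t) => t.toLowerCase());
  return {
    name: "TOPIC_COVERAGE",
    description: "Scores how many of the character's topics a message mentions.",
    // Validation: skip empty messages and topic-less characters (avoids dividing by zero).
    validate: async (message) =>
      normalized.length > 0 && message.content.text.trim().length > 0,
    handler: async (message) => {
      const text = message.content.text.toLowerCase();
      const covered = normalized.filter((topic) => text.includes(topic)).length;
      return covered / normalized.length;
    },
  };
}

const topicCoverage = makeTopicCoverageEvaluator(["border security", "tax"]);
```

Keeping the metric a plain number in [0, 1] makes it easy to document and to compare across messages.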

4.5- Plugin Development Guidelines — Provider Development

- Implement the Provider interface

- Define context generation logic

- Handle state management

- Document provider capabilities
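Finally, a provider sketch. A provider supplies extra context for message generation; here a hypothetical provider keeps per-user message counts (state management) and returns one sentence of context. The reduced `ProviderSketch` type only has a `get` method that takes the message; in `@elizaos/core`, `get` also receives the runtime and state, so treat this as an illustration under that assumption.

```typescript
// Simplified stand-ins (hypothetical), not the @elizaos/core types.
interface Message {
  userId: string;
  content: { text: string };
}

interface ProviderSketch {
  get: (message: Message) => Promise<string>;
}

/**
 * Hypothetical message-count provider.
 * Capabilities: returns one line of context stating how many messages
 * this user has sent so far while the process is running.
 */
function makeMessageCountProvider(): ProviderSketch {
  // State management: counts live in a Map owned by this closure, keyed by user id.
  const counts = new Map<string, number>();
  return {
    get: async (message) => {
      const n = (counts.get(message.userId) ?? 0) + 1;
      counts.set(message.userId, n);
      // Context generation: a short sentence that could be inserted into the prompt.
      return `The user ${message.userId} has sent ${n} message(s) in this session.`;
    },
  };
}

const messageCount = makeMessageCountProvider();
```

Because the state lives in memory, it disappears when the agent restarts; a real provider that needs persistence would store it through the runtime's database adapter instead.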

5-Eliza Code Examples

5.1- Plugin Development Example Source

To understand the source code, instead of reading tens of thousands of lines of TypeScript, we can also feed it to NotebookLM and ask questions. However, NotebookLM cannot import directly from the GitHub website or from your local file system. The solution is to let NotebookLM import from Google Drive.

To add the TypeScript code to Google Drive and then to NotebookLM, do the following:

Clone the develop branch of the Eliza repository (https://github.com/elizaOS/eliza/tree/develop) into your local Google Drive folder:

- Make sure git and Google Drive for Desktop are installed on your machine (*).

- Open a Terminal window.

- Navigate to your local Google Drive folder (adjust the path to wherever Google Drive is mounted on your machine): `cd ~/Google\ Drive`

- Clone the repository's develop branch: `git clone -b develop https://github.com/elizaOS/eliza.git`

- Navigate to the cloned folder: `cd eliza`

(*) Here is how to have Google Drive locally, for macOS (for Windows and Linux, ask your chatbot)

- Download Google Drive for Desktop at: https://www.google.com/drive/download/

- Install using the image installer

- Sign in to your Google Drive account

- Choose the “Mirror files” sync option so that the files are also available locally

Add the Eliza code to NotebookLM

- Add to the notebook's sources the file that contains the runtime code of the Core Package: [path_to_your_Google_drive_eliza_clone]/eliza/packages/core/src/runtime.ts.

- Add to the notebook's sources the file that contains the agent code of Eliza: [path_to_your_Google_drive_eliza_clone]/eliza/agent/src/index.ts.

- Add to the notebook's sources the folder that contains the example code of an Eliza plugin: [path_to_your_Google_drive_eliza_clone]/eliza/packages/_examples/plugin/src/.